This is a multi-part series on my newly created DBA Inventory system. The main page, with a table of contents linking to all the posts, is located here.

The previous post covered server details such as memory, CPUs, cores, BIOS and operating system details, all gathered with WMI calls. This post polls another set of server-level (operating system) details: disk info.

This PowerShell “poller” grabs disk info such as the drive letter, the disk model, the partition, a description, whether it’s a primary partition, the volume name, whether it’s SAN attached, whether it’s a mount point, disk size, disk free space, and a serial number. It stores all of this in a history table keyed by an “Audit Date”. That table is named “DiskInfo”!

CREATE TABLE [dbo].[DiskInfo](
    [ServerName] [varchar](256) NOT NULL,
    [DiskName] [varchar](256) NOT NULL,
    [AuditDt] [datetime] NOT NULL,
    [Model] [varchar](256) NULL,
    [DiskPartition] [varchar](256) NULL,
    [Description] [varchar](256) NULL,
    [PrimaryPartition] [bit] NULL,
    [VolumeName] [varchar](256) NULL,
    [Drive] [varchar](128) NULL,
    [SanAttached] [bit] NULL,
    [MountPoint] [bit] NULL,
    [DiskSizeMB] [bigint] NULL,
    [DiskFreeMB] [bigint] NULL,
    [SerialNumber] [varchar](128) NULL,
    CONSTRAINT [PK_DiskInfo] PRIMARY KEY CLUSTERED
    (
        [ServerName] ASC,
        [DiskName] ASC,
        [AuditDt] ASC
    ) WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90) ON [PRIMARY]
) ON [PRIMARY]
GO
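When no ColumnMappings are defined, SqlBulkCopy maps source columns to destination columns by position. The in-memory DataTable that the scripts build must therefore list its columns in the same order as this table, with types that convert cleanly. Below is a minimal sketch of a shared table factory. It assumes you want a single definition that maps bit to Boolean and bigint to Int64. `New-DiskInfoTable` is a hypothetical name and is not part of DBATools.

```powershell
# Sketch: build an empty DataTable whose column order and .NET types line up
# with dbo.DiskInfo, so SqlBulkCopy's default ordinal mapping just works.
# New-DiskInfoTable is a hypothetical helper, not part of DBATools.
function New-DiskInfoTable {
    $table = New-Object System.Data.DataTable 'DiskInfo'
    # Order must match the CREATE TABLE column order.
    $columns = [ordered]@{
        ServerName       = [string]
        DiskName         = [string]
        AuditDt          = [datetime]
        Model            = [string]
        DiskPartition    = [string]
        Description      = [string]
        PrimaryPartition = [bool]    # bit
        VolumeName       = [string]
        Drive            = [string]
        SanAttached      = [bool]    # bit
        MountPoint       = [bool]    # bit
        DiskSizeMB       = [long]    # bigint
        DiskFreeMB       = [long]    # bigint
        SerialNumber     = [string]
    }
    foreach ($name in $columns.Keys) {
        [void]$table.Columns.Add($name, $columns[$name])
    }
    # The leading comma stops PowerShell from unrolling the table into its rows.
    return ,$table
}
```

Call `$table = New-DiskInfoTable` and then fill it with `$table.NewRow()`, as both scripts in this post do. Because the types already match the destination, the copy does not have to coerce strings or integers into bigint and bit.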

The central PowerShell script only queries my helper database, DBATools, which is installed on every instance. Each instance has a job that runs nightly and collects its local disk information into DBATools. I originally worked on a centralized script that polled every server via WMI, but it was slow and cumbersome to run in my environment. This approach is much faster. Here is the central polling script; it’s pretty simple.

[void] [System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.SMO")

if (Get-PSSnapin -Registered -Name SqlServerCmdletSnapin100 -ErrorAction SilentlyContinue)
{
    Add-PSSnapin SqlServerCmdletSnapin100 -ErrorAction SilentlyContinue
    Add-PSSnapin SqlServerProviderSnapin100 -ErrorAction SilentlyContinue
    Import-Module "C:\DBATools\dbatoolsPost2008.psm1" -ErrorAction SilentlyContinue
}
elseif (Get-Module sqlps -ListAvailable -ErrorAction SilentlyContinue)
{
    Import-Module sqlps #-ErrorAction SilentlyContinue
    Import-Module "C:\DBATools\dbatoolsPost2008.psm1" -ErrorAction SilentlyContinue
}
else
{
    Import-Module "C:\DBATools\dbatoolsPre2008.psm1" -ErrorAction SilentlyContinue
}

Import-Module "C:\DBATools\DBATOOLSCommon.psm1"

$dbaToolsServer = "CS-SQL-INFRAMONITORING-US-DEV-001"
$inventoryDB = "Inventory"

Function GetDiskInfo ($serverName, $sourceDB, $altCredential, $runDt)
{
    # In-memory copy of the rows; column order and types mirror Inventory.dbo.DiskInfo
    # (bit -> Boolean, bigint -> Int64) so SqlBulkCopy can map them by position
    $DiskInfo = New-Object System.Data.DataTable
    [void]$DiskInfo.Columns.Add("ServerName", "System.String")
    [void]$DiskInfo.Columns.Add("DiskName", "System.String")
    [void]$DiskInfo.Columns.Add("AuditDt", "System.DateTime")
    [void]$DiskInfo.Columns.Add("Model", "System.String")
    [void]$DiskInfo.Columns.Add("DiskPartition", "System.String")
    [void]$DiskInfo.Columns.Add("Description", "System.String")
    [void]$DiskInfo.Columns.Add("PrimaryPartition", "System.Boolean")
    [void]$DiskInfo.Columns.Add("VolumeName", "System.String")
    [void]$DiskInfo.Columns.Add("Drive", "System.String")
    [void]$DiskInfo.Columns.Add("SanAttached", "System.Boolean")
    [void]$DiskInfo.Columns.Add("MountPoint", "System.Boolean")
    [void]$DiskInfo.Columns.Add("DiskSizeMB", "System.Int64")
    [void]$DiskInfo.Columns.Add("DiskFreeMB", "System.Int64")
    [void]$DiskInfo.Columns.Add("SerialNumber", "System.String")

    # Read the rows the local job collected into this instance's DBATools database
    $sqlQuery = "
SELECT [ServerName]
      ,[DiskName]
      ,[AuditDt]
      ,[Model]
      ,[DiskPartition]
      ,[Description]
      ,[PrimaryPartition]
      ,[VolumeName]
      ,[Drive]
      ,[SanAttached]
      ,[MountPoint]
      ,[DiskSizeMB]
      ,[DiskFreeMB]
      ,[SerialNumber]
FROM [DiskInfo]
"

    if ($altCredential -ne $null) {
        # Non-domain server: connect with the stored SQL login instead of Windows authentication
        Write-Host "Using Alt Credentials!"
        $user = $altCredential.UserName
        $pass = $altCredential.GetNetworkCredential().Password
        $splat = @{
            UserName       = $user
            Password       = $pass
            ServerInstance = $serverName
            Database       = $sourceDB
            Query          = $sqlQuery
        }
        $diskQuery = Invoke-Sqlcmd @splat
    } else {
        $diskQuery = QuerySql $serverName $sourceDB $sqlQuery 60000
    }

    foreach ($row in $diskQuery)
    {
        $newRow = $DiskInfo.NewRow()
        $newRow["ServerName"] = $row.ServerName
        $newRow["DiskName"] = $row.DiskName
        # Stamp every row with this polling run's date, not the local collection date
        $newRow["AuditDt"] = [DateTime]$runDt
        $newRow["Model"] = $row.Model
        $newRow["DiskPartition"] = $row.DiskPartition
        $newRow["Description"] = $row.Description
        $newRow["PrimaryPartition"] = $row.PrimaryPartition
        $newRow["VolumeName"] = $row.VolumeName
        $newRow["Drive"] = $row.Drive
        $newRow["SanAttached"] = $row.SanAttached
        $newRow["MountPoint"] = $row.MountPoint
        $newRow["DiskSizeMB"] = $row.DiskSizeMB
        $newRow["DiskFreeMB"] = $row.DiskFreeMB
        $newRow["SerialNumber"] = $row.SerialNumber
        $DiskInfo.Rows.Add($newRow)
    }

    if ($DiskInfo.Rows.Count -gt 0)
    {
        $cn = New-Object System.Data.SqlClient.SqlConnection("Data Source=$dbaToolsServer;Integrated Security=SSPI;Initial Catalog=$inventoryDB")
        try
        {
            $cn.Open()
            $bc = New-Object System.Data.SqlClient.SqlBulkCopy($cn)
            $bc.DestinationTableName = "DiskInfo"
            # If another instance on the same host already loaded these
            # (ServerName, DiskName, AuditDt) keys, the primary key rejects this copy
            $bc.WriteToServer($DiskInfo)
        }
        catch [System.Exception]
        {
            $errVal = $_.Exception.GetType().FullName + " - " + $_.FullyQualifiedErrorID
            Write-Host "Insert Error $serverName"
            Write-Host $errVal
            Write-Host $_.Exception.Message
            $script:errCount++
        }
        finally
        {
            # Always release the connection, even when the bulk copy fails
            $cn.Close()
        }
    }
}

###########################################################################################
#
# MAIN
#
###########################################################################################

$startDate = Get-Date
$errCount = 0

# One AuditDt for the whole run, truncated to the hour
$runDt = Get-Date -Format "yyyy-MM-dd HH:00:00"

$sqlQuery = @"
SELECT [InstanceName] = ID.InstanceName,
       [DBAToolsDB]   = DD.DatabaseName,
       [UName]        = AC.Uname,
       [PWord]        = AC.PWord
FROM InstanceDetails ID
LEFT OUTER JOIN DatabaseDetails DD
    ON ID.InstanceName = DD.InstanceName
LEFT OUTER JOIN [AltSqlCredentials] AC
    ON ID.InstanceName = AC.instancename
   AND AC.[SourceServerNetBIOS] = (SELECT SERVERPROPERTY('ComputerNamePhysicalNetBIOS'))
WHERE ID.Retired = 0
  AND ID.LicenseOnly = 0
  AND DD.DatabaseName LIKE 'DBATools%'
"@

$instances = QuerySql $dbaToolsServer $inventoryDB $sqlQuery 60000

foreach ($instance in $instances)
{
    $instanceName = $instance[0]
    $sourceDB = $instance[1]
    $user = $instance[2]
    $pass = $instance[3]
    $altCredential = $null

    if ($user -isnot [System.DBNull]) {
        Write-Host "Found alt credential for $instanceName"
        # PWord holds an encrypted standard string; turn it back into a SecureString
        $pass = $pass | ConvertTo-SecureString
        $altCredential = New-Object -TypeName System.Management.Automation.PSCredential `
            -ArgumentList $user, $pass
    }

    $instanceName
    GetDiskInfo $instanceName $sourceDB $altCredential $runDt
}

$endDate = Get-Date

# Dates are written in ISO 8601 ('s' format) so SQL Server parses them the same way under any language setting
$sqlQuery = "INSERT INTO MonitoringJobStatus
    (JOBNAME, STATUS, STARTDT, ENDDT, RANGESTARTDT, RANGEENDDT, ERROR)
VALUES
    ('DISKINFO', 'C', '$($startDate.ToString('s'))', '$($endDate.ToString('s'))', '', '', '')"

QuerySql $dbaToolsServer $inventoryDB $sqlQuery 60000

exit

This query gets the list of instances to poll. It also introduces the concept of an alternate SQL login credential. For servers not joined to the domain, the SQL login’s password is stored as an encrypted SecureString, and the poller uses that login to connect instead of the AD credential.

$sqlQuery = @"
SELECT [InstanceName] = ID.InstanceName,
       [DBAToolsDB]   = DD.DatabaseName,
       [UName]        = AC.Uname,
       [PWord]        = AC.PWord
FROM InstanceDetails ID
LEFT OUTER JOIN DatabaseDetails DD
    ON ID.InstanceName = DD.InstanceName
LEFT OUTER JOIN [AltSqlCredentials] AC
    ON ID.InstanceName = AC.instancename
   AND AC.[SourceServerNetBIOS] = (SELECT SERVERPROPERTY('ComputerNamePhysicalNetBIOS'))
WHERE ID.Retired = 0
  AND ID.LicenseOnly = 0
  AND DD.DatabaseName LIKE 'DBATools%'
"@

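The poller decrypts PWord with a bare `ConvertTo-SecureString` (no `-Key`). That means the stored value has to be an encrypted standard string of the kind `ConvertFrom-SecureString` produces. Without a key, that encryption uses the Windows Data Protection API (DPAPI), so only the same Windows account on the same machine can decrypt it. That is presumably why the query filters on `SourceServerNetBIOS`.

Below is a sketch of both sides. The helper names `Protect-SqlPassword` and `New-AltSqlCredential` are hypothetical. The optional AES `-Key` path is an alternative the original does not use; it is included for environments where DPAPI is not available.

```powershell
# Sketch: produce and consume an [AltSqlCredentials].PWord value.
# Assumption: PWord is created with ConvertFrom-SecureString, which the
# poller's bare ConvertTo-SecureString call implies. Helper names are hypothetical.
function Protect-SqlPassword {
    param(
        [Parameter(Mandatory)] [string] $PlainText,
        [byte[]] $Key   # optional 16/24/32-byte AES key; omit to use DPAPI like the original
    )
    $secure = ConvertTo-SecureString -String $PlainText -AsPlainText -Force
    if ($PSBoundParameters.ContainsKey('Key')) {
        ConvertFrom-SecureString -SecureString $secure -Key $Key
    } else {
        ConvertFrom-SecureString -SecureString $secure
    }
}

function New-AltSqlCredential {
    param(
        [Parameter(Mandatory)] [string] $UserName,
        [Parameter(Mandatory)] [string] $EncryptedPassword,
        [byte[]] $Key
    )
    if ($PSBoundParameters.ContainsKey('Key')) {
        $secure = ConvertTo-SecureString -String $EncryptedPassword -Key $Key
    } else {
        $secure = ConvertTo-SecureString -String $EncryptedPassword
    }
    # Same object type the poller builds before calling GetDiskInfo
    New-Object System.Management.Automation.PSCredential -ArgumentList $UserName, $secure
}
```

With the DPAPI default, run `Protect-SqlPassword` as the account that executes the central poller, on the polling server. If you run it anywhere else, the poller will not be able to decrypt the stored string.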
The GetDiskInfo function creates a new data table and queries the DiskInfo table in that instance’s DBATools database, using the alternate SQL credentials if they exist. It stamps every row with this run’s AuditDt and then bulk loads the rows into the DiskInfo table in the Inventory database.
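GetDiskInfo ignores the AuditDt collected locally and stamps every row with the central run’s `$runDt`, truncated to the hour. That detail is what makes the duplicate handling described later in this post work. Below is a minimal sketch of the same truncation done directly on a `[datetime]`, which skips the format-then-parse round trip. `Get-HourlyRunDt` is a hypothetical helper, not part of the original scripts.

```powershell
# Sketch: one AuditDt per polling run, truncated to the top of the hour.
# Get-HourlyRunDt is a hypothetical helper name.
function Get-HourlyRunDt {
    param([datetime] $Now = (Get-Date))
    # Midnight of the same day plus the whole hours elapsed
    $Now.Date.AddHours($Now.Hour)
}
```

Computed once, before the instance loop (for example `$runDt = Get-HourlyRunDt`), every instance on a shared host yields rows with an identical (ServerName, DiskName, AuditDt) key for that run.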

The local DiskInfo table is identical; it’s just in the DBATools database on every instance. So let’s look at the PowerShell script that’s on each local server as part of the DBATools installation.

#
# GetDiskSanInfo.ps1
#
# This script determines for SAN that is attached whether
# it is a mount point and whether it is replicated.
#
# DiskExt.exe is used to determine which physical disk a mount point resides on.
# inq.wnt.exe is used to determine whether a given SAN volume is replicated.
#
# The dbo.DiskInfo table in DBATools is updated with this information. From
# there the DB Inventory service will read it and update the DBInv database.
#

if (Get-PSSnapin -Registered -Name SqlServerCmdletSnapin100 -ErrorAction SilentlyContinue)
{
    Add-PSSnapin SqlServerCmdletSnapin100 -ErrorAction SilentlyContinue
    Add-PSSnapin SqlServerProviderSnapin100 -ErrorAction SilentlyContinue
    Import-Module "C:\DBATools\dbatoolsPost2008.psm1" -ErrorAction SilentlyContinue
}
elseif (Get-Module sqlps -ListAvailable -ErrorAction SilentlyContinue)
{
    Import-Module sqlps -ErrorAction SilentlyContinue
    Import-Module "C:\DBATools\dbatoolsPost2008.psm1" -ErrorAction SilentlyContinue
}
else
{
    Import-Module "C:\DBATools\dbatoolsPre2008.psm1" -ErrorAction SilentlyContinue
}

Import-Module "C:\DBATools\DBATOOLSCommon.psm1"

$scriptPath = Split-Path -Parent $MyInvocation.MyCommand.Definition

# Default DBATools server; the first script argument overrides it
$dbaToolsServer = "CS-SQL-INFRAMONITORING-US-DEV-001"
$SQLName = $args[0]
if ($SQLName -ne $null)
{ $dbaToolsServer = $SQLName }

# On a cluster, store every disk under the cluster name; otherwise use the host name
if (Get-Module FailoverClusters -ListAvailable -ErrorAction SilentlyContinue)
{
    Import-Module FailoverClusters
    $clusterName = Get-Cluster
    $physicalName = $clusterName.Name.ToUpper()
} else {
    $physicalName = (hostname).ToUpper()
}

$runDt = Get-Date -Format "MM/dd/yyyy HH:00:00"

# this is the table that will hold the data during execution
$serverDisk = New-Object System.Data.DataTable
[void]$serverDisk.Columns.Add("ServerName", "System.String")
[void]$serverDisk.Columns.Add("DiskName", "System.String")
[void]$serverDisk.Columns.Add("AuditDt", "System.DateTime")
[void]$serverDisk.Columns.Add("Model", "System.String")
[void]$serverDisk.Columns.Add("DiskPartition", "System.String")
[void]$serverDisk.Columns.Add("Description", "System.String")
[void]$serverDisk.Columns.Add("PrimaryPartition", "System.Boolean")
[void]$serverDisk.Columns.Add("VolumeName", "System.String")
[void]$serverDisk.Columns.Add("Drive", "System.String")
[void]$serverDisk.Columns.Add("SanAttached", "System.Boolean")
[void]$serverDisk.Columns.Add("MountPoint", "System.Boolean")
[void]$serverDisk.Columns.Add("DiskSizeMB", "System.Int64")
[void]$serverDisk.Columns.Add("DiskFreeMB", "System.Int64")
[void]$serverDisk.Columns.Add("SerialNumber", "System.String")

# WMI data
$wmi_diskdrives = Get-WmiObject -Class Win32_DiskDrive
# Fixed-disk volumes with no drive letter that are not bare \\?\Volume{GUID} paths, i.e. mount points
$wmi_mountpoints = Get-WmiObject -Class Win32_Volume -Filter "DriveType=3 AND DriveLetter IS NULL AND NOT Name like '\\\\?\\Volume%'"

foreach ($diskdrive in $wmi_diskdrives)
{
    # Physical disk -> its partitions (backslashes in the DeviceID are doubled for WQL)
    $partitionquery = "ASSOCIATORS OF {Win32_DiskDrive.DeviceID=`"$($diskdrive.DeviceID.replace('\','\\'))`"} WHERE AssocClass = Win32_DiskDriveToDiskPartition"
    $partitions = @(Get-WmiObject -Query $partitionquery)

    foreach ($partition in $partitions)
    {
        # Partition -> its logical disks (drive letters)
        $logicaldiskquery = "ASSOCIATORS OF {Win32_DiskPartition.DeviceID=`"$($partition.DeviceID)`"} WHERE AssocClass = Win32_LogicalDiskToPartition"
        $logicaldisks = @(Get-WmiObject -Query $logicaldiskquery)

        foreach ($logicaldisk in $logicaldisks)
        {
            $newRow = $serverDisk.NewRow()
            $newRow["ServerName"] = $physicalName
            $newRow["DiskName"] = $logicalDisk.Name
            $newRow["AuditDt"] = [DateTime]$runDt
            $newRow["Model"] = $diskDrive.Model
            $newRow["DiskPartition"] = $partition.Name
            $newRow["Description"] = $partition.Description
            $newRow["PrimaryPartition"] = $partition.PrimaryPartition
            $newRow["VolumeName"] = $logicalDisk.volumeName
            $newRow["Drive"] = $diskDrive.Name
            # Only NetApp arrays exist here, so a NETAPP model means SAN (null-safe, case-sensitive)
            $newRow["SanAttached"] = ($diskdrive.Model -clike "NETAPP*")
            $newRow["MountPoint"] = $FALSE
            $newRow["DiskSizeMB"] = [math]::truncate($logicalDisk.Size / 1MB)
            $newRow["DiskFreeMB"] = [math]::truncate($logicalDisk.FreeSpace / 1MB)
            $newRow["SerialNumber"] = $diskDrive.serialNumber
            $serverDisk.Rows.Add($newRow)
        }
    }
}

# Mount points are weird, so we do them separately.
if ($wmi_mountpoints)
{
    foreach ($mountpoint in $wmi_mountpoints)
    {
        $newRow = $serverDisk.NewRow()
        $newRow["ServerName"] = $physicalName
        $newRow["DiskName"] = $mountpoint.Name
        $newRow["AuditDt"] = [DateTime]$runDt
        $newRow["Model"] = 'Mount Point'
        $newRow["DiskPartition"] = [DBNull]::Value
        $newRow["Description"] = $mountpoint.Caption
        $newRow["PrimaryPartition"] = $FALSE
        $newRow["VolumeName"] = $mountpoint.Caption
        # Parent drive letter of the mount point, e.g. "E:"
        $newRow["Drive"] = [Regex]::Match($mountpoint.Caption, "(^.:)").Value
        # Mount points are always assumed to be SAN attached
        $newRow["SanAttached"] = $TRUE
        $newRow["MountPoint"] = $TRUE
        $newRow["DiskSizeMB"] = [math]::truncate($mountpoint.Capacity / 1MB)
        $newRow["DiskFreeMB"] = [math]::truncate($mountpoint.FreeSpace / 1MB)
        $newRow["SerialNumber"] = [DBNull]::Value
        $serverDisk.Rows.Add($newRow)
    }
}

$sqlCmd = "SELECT name FROM sys.databases WHERE name LIKE 'DBATools%'"
$dbatoolsDBName = QuerySql $dbaToolsServer "master" $sqlCmd
$DBAToolsDB = $dbatoolsDBName[0]

$sqlCmd = "TRUNCATE TABLE dbo.DiskInfo"
QuerySql $dbaToolsServer $DBAToolsDB $sqlCmd

# create the connection for the bulk load
$connString = "Data Source=$dbaToolsServer; Initial Catalog=$DBAToolsDB; Integrated Security=True; Application Name=GetSanDiskInfo.ps1; "
$sqlConn = New-Object System.Data.SqlClient.SqlConnection $connString

try
{
    $sqlConn.Open()
    # upload the table to DBATools
    $bulkCopy = New-Object System.Data.SqlClient.SqlBulkCopy($sqlConn)
    $bulkCopy.DestinationTableName = "dbo.DiskInfo"
    $bulkCopy.WriteToServer($serverDisk)
}
catch [System.Exception]
{
    $errVal = $_.Exception.GetType().FullName + " - " + $_.FullyQualifiedErrorID
    Write-Host "Bulkcopy Error"
    Write-Host $errVal
    Write-Host $_.Exception.Message
    $errCount++
}
finally
{
    # Always release the connection, even when the bulk copy fails
    $sqlConn.Close()
}

It’s very similar: it builds a data table with one row per disk. It also imports FailoverClusters to get the cluster name; on a cluster, it stores all the disks under the cluster name. It then queries WMI for Win32_DiskDrive and Win32_Volume. The filter on Win32_Volume limits the results to mount points: -Filter "DriveType=3 AND DriveLetter IS NULL AND NOT Name like '\\\\?\\Volume%'" keeps fixed-disk volumes that have no drive letter and aren’t bare volume GUID paths.

It then uses some WMI magic. For each disk drive, an “ASSOCIATORS OF {Win32_DiskDrive}” query by device ID returns its partitions. For each partition, a second “ASSOCIATORS OF {Win32_DiskPartition}” query by device ID returns its logical disks. These nested queries are what really slowed the script down when I ran it from a centralized location, and they forced me to run it locally on each server.
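To make the escaping in those two queries easier to see, here is a sketch that builds the same WQL strings as plain functions. The names `Get-PartitionQuery` and `Get-LogicalDiskQuery` are hypothetical. Win32_DiskDrive device IDs look like `\\.\PHYSICALDRIVE0`, and each backslash has to be doubled inside a WQL string literal. Partition device IDs such as `Disk #0, Partition #1` contain none.

```powershell
# Sketch: the two ASSOCIATORS OF queries from the local script, as pure functions.
# Function names are hypothetical; the query text matches the script's.
function Get-PartitionQuery {
    param([Parameter(Mandatory)] [string] $DiskDeviceId)
    # Double every backslash so WQL reads \\.\PHYSICALDRIVE0 literally
    $escaped = $DiskDeviceId.Replace('\', '\\')
    "ASSOCIATORS OF {Win32_DiskDrive.DeviceID=`"$escaped`"} WHERE AssocClass = Win32_DiskDriveToDiskPartition"
}

function Get-LogicalDiskQuery {
    param([Parameter(Mandatory)] [string] $PartitionDeviceId)
    # Partition IDs have no backslashes, so they go in as-is
    "ASSOCIATORS OF {Win32_DiskPartition.DeviceID=`"$PartitionDeviceId`"} WHERE AssocClass = Win32_LogicalDiskToPartition"
}
```

On a server, the output can go straight into `Get-WmiObject -Query`, as the local script’s loop does. Keeping the string building separate also makes it easy to check the escaping without touching WMI.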

I make an assumption with the SanAttached value: if the drive model starts with “NETAPP”, I treat the disk as SAN attached. We only have NetApp arrays in the company, so I can make that assumption. Other vendors (EMC, 3PAR, Dell, etc.) would need their own model checks, but this works for my environment today.
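If you do need more vendors, one option is to move the check into a small function driven by a list of model prefixes. This is a sketch only. `'NETAPP'` is the only prefix the original script checks; the commented entry is a placeholder, and the real strings should come from `Win32_DiskDrive.Model` on your own hosts. `Test-SanModel` and `$SanModelPrefixes` are hypothetical names.

```powershell
# Sketch: SanAttached check driven by a prefix list.
# 'NETAPP' comes from the original script; anything else is a placeholder.
$SanModelPrefixes = @(
    'NETAPP'
    # 'OTHERVENDOR'   # placeholder: use the Model prefix your arrays actually report
)

function Test-SanModel {
    param(
        [string]   $Model,                       # $null binds as an empty string
        [string[]] $Prefixes = $SanModelPrefixes
    )
    if ([string]::IsNullOrEmpty($Model)) { return $false }
    foreach ($prefix in $Prefixes) {
        # Case-sensitive, like the original String.StartsWith("NETAPP") call
        if ($Model.StartsWith($prefix, [System.StringComparison]::Ordinal)) { return $true }
    }
    return $false
}
```

In the disk loop, the assignment would become `$newRow["SanAttached"] = Test-SanModel -Model $diskdrive.Model`.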

I load mount points into the data table in a separate loop, and I always assume they are SAN attached.
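The mount-point mapping is easier to reason about, and to test without WMI, when it is written as a function of the volume’s properties. Here is a sketch that assumes the input only needs the Win32_Volume properties the original loop reads (Name, Caption, Capacity, FreeSpace). Like the original, it treats every mount point as SAN attached. `ConvertTo-MountPointRecord` is a hypothetical name.

```powershell
# Sketch: map one Win32_Volume-like object to a DiskInfo-shaped record.
# Only Name, Caption, Capacity and FreeSpace are read, as in the original loop.
function ConvertTo-MountPointRecord {
    param(
        [Parameter(Mandatory)] [string]   $ServerName,
        [Parameter(Mandatory)]            $Volume,
        [Parameter(Mandatory)] [datetime] $AuditDt
    )
    [ordered]@{
        ServerName       = $ServerName
        DiskName         = $Volume.Name
        AuditDt          = $AuditDt
        Model            = 'Mount Point'
        DiskPartition    = [DBNull]::Value
        Description      = $Volume.Caption
        PrimaryPartition = $false
        VolumeName       = $Volume.Caption
        Drive            = [regex]::Match($Volume.Caption, '^.:').Value   # parent drive, e.g. 'E:'
        SanAttached      = $true    # same assumption as the original script
        MountPoint       = $true
        DiskSizeMB       = [long][math]::Truncate($Volume.Capacity / 1MB)
        DiskFreeMB       = [long][math]::Truncate($Volume.FreeSpace / 1MB)
        SerialNumber     = [DBNull]::Value
    }
}
```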

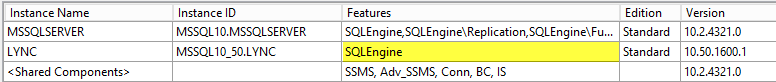

If you’re astute, you’ll notice a catch. If I have multiple instances on the same server, each of their DBATools databases will have a DiskInfo table containing the same information. My centralized polling script will then pull all of those copies and try to load them into the Inventory database, but that table has a primary key on ServerName, DiskName, and AuditDt. ServerName is the physical server name or, for a cluster, the cluster’s virtual computer name. The poller also stamps every row in a run with the same AuditDt, so the copies collide. What I do is discard the duplicates. Who needs them? Rather than write a bunch of logic to detect them and skip polling, I let the clustered primary key constraint do the work and reject the dupes.

For example, I have one two-node active/passive cluster with seven instances on it. Each instance has a DBATools database, and each instance runs the local PowerShell script and loads the cluster’s disk info into its own DBATools database. The centralized polling script connects to each of those seven instances and attempts to load the same data seven times. Six of those loads fail on the primary key and are discarded. Big deal: as long as I get one, that’s all that matters.

I now have a list of all disks, volumes, and mount points, with total space and free space, for every server in my Inventory, and I have it every day. I can now trend growth at the server/disk layer over time.
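The same “let the key reject duplicates” idea can be reproduced in memory. A DataTable whose PrimaryKey is (ServerName, DiskName, AuditDt) throws a ConstraintException on the second copy of a row. That is analogous to SQL Server raising a primary key violation (IGNORE_DUP_KEY = OFF) and failing that instance’s bulk copy. This sketch only illustrates the behavior; `Add-DiskRowOnce` is a hypothetical helper, and the central script itself relies on the SQL Server constraint.

```powershell
# Sketch: in-memory analogue of PK_DiskInfo rejecting duplicate copies.
# Add-DiskRowOnce is a hypothetical helper used only for illustration.
function Add-DiskRowOnce {
    param(
        [Parameter(Mandatory)] [System.Data.DataTable] $Table,
        [Parameter(Mandatory)] [object[]] $Values
    )
    try {
        [void]$Table.Rows.Add($Values)
        return $true
    } catch {
        # .NET exceptions can arrive wrapped (e.g. MethodInvocationException), so walk the chain
        $ex = $_.Exception
        while ($null -ne $ex -and $ex -isnot [System.Data.ConstraintException]) {
            $ex = $ex.InnerException
        }
        if ($null -ne $ex) { return $false }   # duplicate key: discard it
        throw
    }
}

# Same three key columns as PK_DiskInfo
$keyed = New-Object System.Data.DataTable
[void]$keyed.Columns.Add('ServerName', [string])
[void]$keyed.Columns.Add('DiskName',   [string])
[void]$keyed.Columns.Add('AuditDt',    [datetime])
$keyed.PrimaryKey = @($keyed.Columns['ServerName'], $keyed.Columns['DiskName'], $keyed.Columns['AuditDt'])
```

Feeding the same cluster row in once per instance leaves a single row in the table, which mirrors the seven-instance example above.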

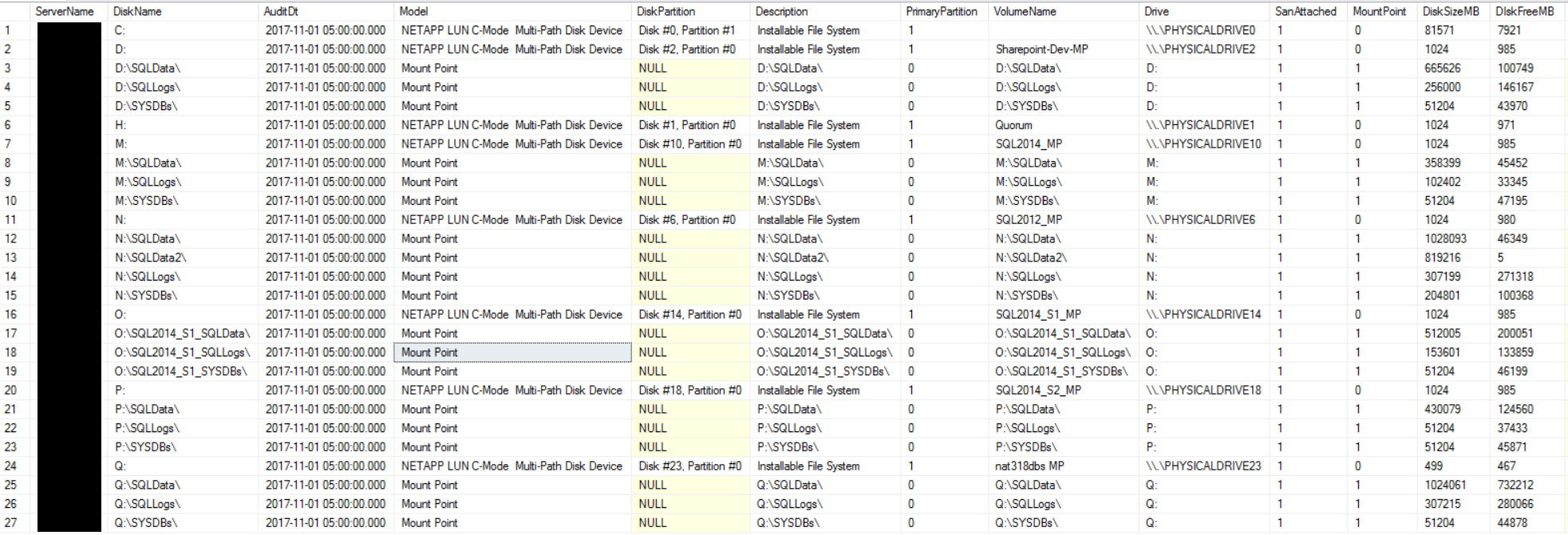

Here’s the sample data from a single cluster of mine, which has six instances on it. I group each instance on a drive letter with three mount points under it: one for user database data files, one for user database transaction logs, and one for system DBs and the instance root. Just think of the trending and Power BI charts you could make with a year’s worth of data from every SQL Server in your environment!
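As a starting point for that trending, here is a minimal sketch that turns one disk’s history into an average MB-per-day growth figure. It assumes rows shaped like Inventory..DiskInfo (AuditDt, DiskSizeMB, DiskFreeMB) for a single ServerName/DiskName pair. It measures used space (size minus free), so a disk expansion does not show up as negative growth. It is a simple first-versus-last slope, not a fitted regression, and `Get-DiskGrowthMBPerDay` is a hypothetical name.

```powershell
# Sketch: average daily growth in used space for one disk.
# Input rows need AuditDt, DiskSizeMB and DiskFreeMB; first-vs-last slope only.
function Get-DiskGrowthMBPerDay {
    param([Parameter(Mandatory)] [object[]] $History)
    $sorted = @($History | Sort-Object AuditDt)
    if ($sorted.Count -lt 2) { return $null }       # need two samples for a slope
    $first = $sorted[0]
    $last  = $sorted[-1]
    $days  = ($last.AuditDt - $first.AuditDt).TotalDays
    if ($days -le 0) { return $null }
    $firstUsed = $first.DiskSizeMB - $first.DiskFreeMB
    $lastUsed  = $last.DiskSizeMB  - $last.DiskFreeMB
    [math]::Round(($lastUsed - $firstUsed) / $days, 2)
}
```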